Data labelling is a crucial step in machine learning and artificial intelligence (AI) applications. It is the process of assigning accurate, meaningful annotations (labels) to raw data such as images, text, audio, or video, so that the data can be used to train machine learning models. Here are the main reasons why data labelling is done:

- Training machine learning models

Labelled data is used to train and build machine learning models. Models learn from the labelled examples and use that knowledge to make predictions or perform tasks based on new, unlabelled data.

- Supervised learning

In supervised learning, one of the most widely used approaches in machine learning, labelled data acts as the ground truth that guides the learning process. Each labelled example pairs an input with its correct output, allowing the model to learn patterns, correlations, and rules.
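
To make the idea of learning from ground truth concrete, here is a minimal sketch in Python. It assumes a toy nearest-centroid classifier, chosen only because it fits in a few lines. The feature values, the `cat` and `dog` labels, and the function names `fit_centroids` and `predict` are all hypothetical and used purely for illustration.

```python
from collections import defaultdict
from math import dist


def fit_centroids(examples):
    """Average the feature vectors of each label to get one centroid per class."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + x for s, x in zip(sums[label], features)]
        counts[label] += 1
    return {
        label: tuple(s / counts[label] for s in total)
        for label, total in sums.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the new, unlabelled input."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labelled examples: (feature vector, ground-truth label).
labelled = [
    ((1.0, 1.2), "cat"),
    ((0.8, 1.0), "cat"),
    ((3.0, 3.1), "dog"),
    ((3.2, 2.9), "dog"),
]

centroids = fit_centroids(labelled)
print(predict(centroids, (0.9, 1.1)))
print(predict(centroids, (3.1, 3.0)))
```

Without the labels in `labelled`, there would be nothing to average per class, which is the sense in which labelled data guides the learning process.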

- Creating training datasets

Data labelling is essential for creating high-quality training datasets. These datasets consist of labelled samples that represent a wide range of real-world scenarios and cover the different variations and classes that the model needs to recognise or classify.
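
One simple way to check whether a dataset covers the classes a model needs is to count the labels. The sketch below assumes the labels are available as a plain list of strings. The class names and the helper `class_coverage` are hypothetical.

```python
from collections import Counter


def class_coverage(labels, expected_classes):
    """Count examples per class and list expected classes with no examples."""
    counts = Counter(labels)
    missing = sorted(set(expected_classes) - set(counts))
    return counts, missing


# Hypothetical labels from a small training set.
labels = ["cat", "dog", "cat", "cat"]
expected = {"cat", "dog", "bird"}

counts, missing = class_coverage(labels, expected)
print(dict(counts))
print(missing)
```

A class that appears in `missing`, or with a very low count, is a signal that more labelled samples are needed before training.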

- Improving model accuracy

Accurate and comprehensive labelling enhances the quality of training data, leading to improved model accuracy. The labels provide clear instructions and define the desired output, helping the model learn the correct associations between inputs and outputs.

- Benchmarking and evaluation

Labelled data is also used for benchmarking and evaluating the performance of machine learning models. By comparing the model's predictions with the known labels, researchers and developers can measure the model's accuracy, identify areas for improvement, and track progress over time.
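
As a rough illustration of comparing predictions with known labels, the following Python sketch computes accuracy and a tally of (true label, predicted label) pairs. The `spam` and `ham` labels and the two lists are made-up example data, and the function names are hypothetical.

```python
from collections import Counter


def accuracy(true_labels, predicted_labels):
    """Fraction of predictions that match the known labels."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("cannot compute accuracy on an empty list")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


def confusion_counts(true_labels, predicted_labels):
    """Count how often each (true, predicted) pair occurs."""
    return Counter(zip(true_labels, predicted_labels))


# Hypothetical held-out labels and model predictions.
y_true = ["spam", "ham", "spam", "ham", "spam"]
y_pred = ["spam", "ham", "ham", "ham", "spam"]

print(accuracy(y_true, y_pred))
print(confusion_counts(y_true, y_pred))
```

The pair counts show where the model goes wrong (here, one `spam` message predicted as `ham`), which is often more useful for identifying areas for improvement than a single accuracy figure.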

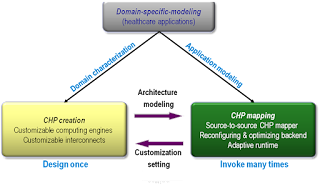

- Domain-specific applications

Data labelling is often tailored to specific domains or applications. For example, in medical imaging, data labelling can involve identifying and annotating regions of interest, such as tumours or abnormalities. In natural language processing, data labelling may include sentiment analysis or named entity recognition.
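
For named entity recognition, a label is typically attached to a span of text rather than to the whole example. The sketch below shows one possible representation using character offsets. The `EntitySpan` class, the `PERSON` and `LOCATION` label set, and the sample sentence are assumptions made for illustration, not a standard format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EntitySpan:
    start: int  # inclusive character offset
    end: int    # exclusive character offset
    label: str


def spans_to_text(text, spans):
    """Return the (surface text, label) pair for each annotated span."""
    return [(text[s.start:s.end], s.label) for s in spans]


def validate_spans(text, spans, allowed_labels):
    """Collect human-readable problems: bad offsets or unknown labels."""
    problems = []
    for s in spans:
        if not (0 <= s.start < s.end <= len(text)):
            problems.append(f"span {s.start}-{s.end} is outside the text")
        if s.label not in allowed_labels:
            problems.append(f"unknown label {s.label!r}")
    return problems


# Hypothetical annotated sentence.
text = "Marie Curie worked in Paris."
spans = [EntitySpan(0, 11, "PERSON"), EntitySpan(22, 27, "LOCATION")]

print(spans_to_text(text, spans))
print(validate_spans(text, spans, {"PERSON", "LOCATION"}))
```

Medical imaging annotations follow the same idea with pixel coordinates or masks in place of character offsets.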

- Data quality control

Data labelling is a means of ensuring data quality and consistency. By carefully labelling the data, experts can identify and correct any errors or inconsistencies, ensuring that the training data is reliable and of high quality.
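
One common way to surface inconsistencies, assumed here rather than taken from the text above, is to have several annotators label each item and send disagreements to an expert. The sketch below keeps a label only when a strict majority agrees on it. The item IDs, labels, and the `consolidate` helper are hypothetical.

```python
from collections import Counter


def consolidate(annotations):
    """Split items into majority-agreed labels and items needing expert review.

    annotations maps an item ID to the list of labels given by annotators.
    """
    consensus = {}
    needs_review = []
    for item_id, labels in annotations.items():
        if not labels:
            needs_review.append(item_id)
            continue
        top_label, top_count = Counter(labels).most_common(1)[0]
        if top_count * 2 > len(labels):  # strict majority of annotators
            consensus[item_id] = top_label
        else:
            needs_review.append(item_id)
    return consensus, needs_review


# Hypothetical labels from up to three annotators per image.
annotations = {
    "img_001": ["tumour", "tumour", "tumour"],
    "img_002": ["tumour", "normal", "tumour"],
    "img_003": ["tumour", "normal"],
}

consensus, needs_review = consolidate(annotations)
print(consensus)
print(needs_review)
```

Items in `needs_review` are the ones an expert would inspect and correct before the data is used for training.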

- Ethical considerations

Data labelling also plays a role in addressing ethical concerns in AI. For example, when training models for sensitive topics, such as hate speech or offensive content detection, labelling guidelines can be designed to enforce ethical standards and prevent biased or harmful outcomes.

Overall, data labelling is a critical step in the machine learning pipeline, enabling the development of accurate and effective models. It bridges the gap between raw data and machine learning algorithms, making the data understandable and useful for training intelligent systems.
